When working with home servers or small business storage systems, managing Logical Volume Manager (LVM) volumes is a common task, especially for those who run Network Attached Storage (NAS) solutions on Linux. These systems are meant to be resilient, scalable, and relatively easy to maintain. However, something as seemingly straightforward as resizing a logical volume can bring everything crashing down if interrupted. That’s exactly what happened in our case — an LVM resize operation was abruptly halted due to a power failure, leaving corrupted metadata and inaccessible volumes in its wake.

TL;DR

During a resize operation on an LVM logical volume in a NAS, a power loss corrupted the volume group metadata and left the volume unreadable. Recovery was possible thanks to LVM’s built-in metadata backup and restore capabilities: using vgcfgrestore, we reverted the volume group’s metadata to its pre-resize state. Knowing where LVM keeps these metadata copies and how to restore them can save your data in situations like this.

What Went Wrong — The Interrupted Resize

LVM provides dynamic resizing capabilities, a significant benefit for systems where workloads and data requirements change over time. Our scenario involved a common operation: extending a logical volume to make use of newly added disk space. The command itself was routine:

lvextend -L +100G /dev/vg_data/lv_media

The outcome was anything but ordinary. Right after the resize started, a power outage hit the NAS. The system had some UPS protection, but it did not hold power long enough for the operation to complete cleanly. When the system came back up, attempts to mount the logical volume failed with I/O errors. On closer inspection we found corrupted metadata: LVM could no longer find or activate several logical volumes.
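In hindsight, the resize would have been easier to undo with an explicit copy of the metadata taken just before it. The wrapper below is a minimal sketch of that idea, not what we actually ran: the function name safe_lvextend and the default /root/lvm-pre-resize directory are hypothetical, and it relies only on the standard vgcfgbackup -f and lvextend -L options. It needs to run as root.

```bash
#!/usr/bin/env bash
# Hypothetical helper: save a copy of the VG metadata, then extend the LV.
# Usage: safe_lvextend VG LV SIZE [BACKUP_DIR]
#   e.g. safe_lvextend vg_data lv_media +100G
safe_lvextend() {
    local vg="$1" lv="$2" size="$3"
    local backup_dir="${4:-/root/lvm-pre-resize}"   # assumed location, outside /etc/lvm
    local stamp backup_file

    stamp=$(date +%Y%m%d-%H%M%S)
    backup_file="$backup_dir/${vg}-${stamp}.vg"
    mkdir -p "$backup_dir" || return 1

    # vgcfgbackup -f writes the current metadata of the VG to the given file.
    if ! vgcfgbackup -f "$backup_file" "$vg"; then
        echo "metadata backup failed; not resizing" >&2
        return 1
    fi
    echo "metadata saved to $backup_file"

    # Only extend once a restorable copy exists.
    lvextend -L "$size" "/dev/$vg/$lv"
}
```

Keeping the extra copy outside /etc/lvm means it survives even if the system disk itself is the one in trouble.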

To understand what happened, it’s important to grasp how LVM operates at a high level.

How LVM Metadata Works

LVM uses metadata to define and manage Volume Groups (VGs), Physical Volumes (PVs), and Logical Volumes (LVs). This metadata is stored in two places:

- On disk, within dedicated metadata areas on the physical volumes in the volume group

- In backup files, typically found in /etc/lvm/archive/ and /etc/lvm/backup/

The on-disk metadata reflects the current state. Of the backup files, /etc/lvm/backup/ holds a copy of the most recent metadata for each volume group, while /etc/lvm/archive/ keeps a history of earlier versions, each saved just before a command changed the metadata. When a volume resize is interrupted, the on-disk metadata can be left inconsistent, possibly leaving logical volumes in a half-configured, unrecognizable state.
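Each metadata file LVM writes carries a description line stating which command it was saved before or after, which makes the difference between the two directories easy to see. A minimal sketch, assuming the default directories and a hypothetical function name show_lvm_copies, that prints that line for the current backup and every archived copy of one volume group:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the description line of every saved metadata
# copy for one volume group.
# Usage: show_lvm_copies VG [BACKUP_DIR] [ARCHIVE_DIR]
show_lvm_copies() {
    local vg="$1"
    local backup_dir="${2:-/etc/lvm/backup}"
    local archive_dir="${3:-/etc/lvm/archive}"
    local f desc

    # Archive files start with <vg>_<sequence number>; requiring digits after
    # the underscore keeps vg_data from also matching e.g. vg_data_old.
    for f in "$backup_dir/$vg" "$archive_dir/${vg}"_[0-9][0-9][0-9][0-9][0-9]*.vg; do
        [ -f "$f" ] || continue   # skip unmatched globs and missing files
        # Extract the text between the quotes of: description = "..."
        desc=$(sed -n 's/^[[:space:]]*description = "\(.*\)"[[:space:]]*$/\1/p' "$f" | head -n 1)
        printf '%s: %s\n' "$(basename "$f")" "${desc:-<no description>}"
    done
}
```

Run as show_lvm_copies vg_data; archived copies typically read "Created *before* executing ..." and the current backup "Created *after* executing ...".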

Recognizing the Signs of Corruption

Upon reboot, the typical signs of LVM metadata corruption may include:

- Failure to activate volume groups automatically

- Errors such as “metadata area descriptor checksum mismatch”

- Logical volumes no longer listed in lvdisplay output

- Inability to mount file systems on affected volumes

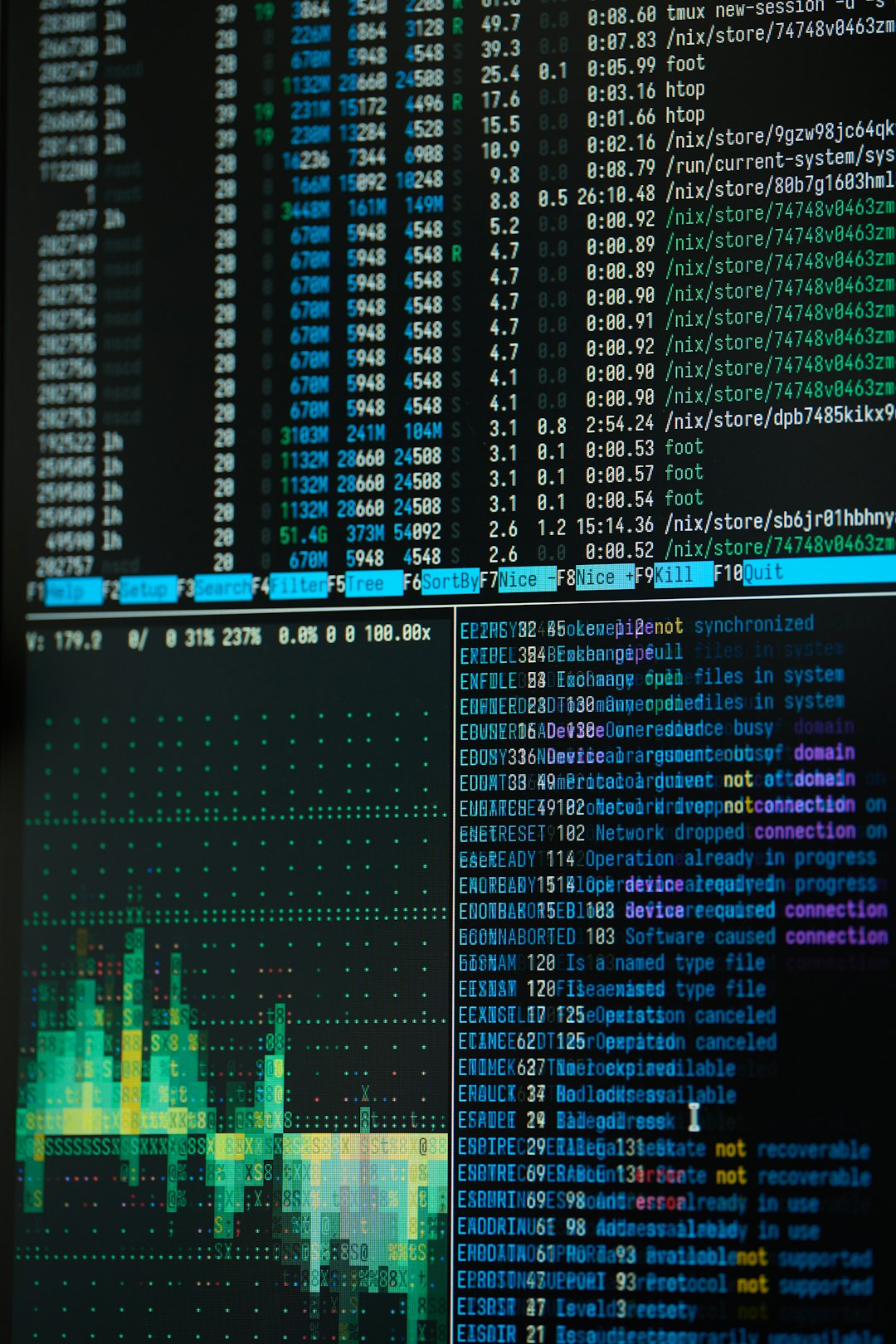

Diagnostic tools such as pvscan, vgscan, and lvscan returned incomplete or inconsistent results. It became clear that our best hope lay in the metadata copies stored under /etc/lvm.
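Before changing anything, it helps to save what these tools report so the pre-recovery state is on record. A minimal sketch: the function name and default log path are hypothetical, vgck (which checks a volume group's metadata for consistency) is our addition to the three scan tools, and each command's exit status is logged instead of aborting, because on a damaged volume group some of them are expected to fail.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run LVM's scan tools and record their output.
# Usage: collect_lvm_diagnostics VG [LOGFILE]
collect_lvm_diagnostics() {
    local vg="$1"
    local log="${2:-/root/lvm-diag-$(date +%Y%m%d-%H%M%S).log}"
    local cmd status

    for cmd in "pvscan" "vgscan" "lvscan" "vgck $vg"; do
        {
            echo "### $cmd"
            # $cmd is intentionally unquoted so "vgck VG" splits into two words.
            $cmd 2>&1
            status=$?
            echo "### exit status: $status"
        } >> "$log"
    done
    echo "diagnostics written to $log"
}
```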

The Step-By-Step Metadata Recovery Process

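Thankfully, LVM saves metadata copies automatically: by default it archives the volume group metadata before every command that changes it, including a resize, and keeps the latest version in /etc/lvm/backup/. Here’s how we rebuilt the logical volumes and recovered our data without restoring from a full backup.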

1. Identifying the Right Metadata Backup

The first step was to check the metadata archives:

ls -l /etc/lvm/archive/

Each file in this directory is a complete copy of a volume group’s metadata, named after the volume group plus a sequence number that grows with every change (in our case, vg_data_00007.vg). The modification times shown by ls -l, along with the description and creation_time fields near the top of each file, help you locate the copy saved before things went wrong.
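LVM can also list the archived copies of a volume group itself with vgcfgrestore --list vg_data. When there are many of them, a small filter helps. A minimal sketch with a hypothetical function name: it prints the newest archive whose description says it was created before an lvextend, assuming archive names carry a zero-padded sequence number so that the shell's sorted glob expansion puts them in order.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the newest archived metadata file for VG that was
# saved right before an lvextend command.
# Usage: find_pre_resize_archive VG [ARCHIVE_DIR]
find_pre_resize_archive() {
    local vg="$1" archive_dir="${2:-/etc/lvm/archive}"
    local f match=""

    # The glob expands in sorted order; with zero-padded sequence numbers
    # the last matching file is the most recent one.
    for f in "$archive_dir/${vg}"_[0-9][0-9][0-9][0-9][0-9]*.vg; do
        [ -f "$f" ] || continue
        if grep -q "^description = \"Created \*before\* executing 'lvextend" "$f"; then
            match="$f"
        fi
    done

    [ -n "$match" ] || { echo "no pre-lvextend archive found for $vg" >&2; return 1; }
    echo "$match"
}
```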

To inspect the contents:

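more /etc/lvm/archive/vg_data_00007.vg

Look for the logical volume entries and their sizes; these should reflect the state before the interrupted resize. The description line at the top of each archive records which command it was saved before, so the copy made just ahead of the interrupted lvextend is easy to spot. Once you identify the correct file, you’re ready to restore.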
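Sizes are not written out directly in the file: each logical volume consists of segments whose extent_count values add up to its length, and the volume group's extent_size is given in 512-byte sectors. A rough awk sketch under those assumptions, with a hypothetical function name; it only tracks braces to know when it is inside the logical_volumes section and is not a full parser of the metadata format.

```bash
#!/usr/bin/env bash
# Hypothetical helper: list each LV in an LVM text-format metadata file with
# its total extent count and size. Assumes extent_size is given in 512-byte
# sectors at the volume group level, as in the files under /etc/lvm/.
# Usage: lv_sizes_from_metadata FILE
lv_sizes_from_metadata() {
    awk '
        { sub(/#.*/, "") }                     # strip comments
        /\{[ \t]*$/ {                          # a line that opens a section
            depth++
            if (depth == 2 && $1 == "logical_volumes") {
                in_lvs = 1
            } else if (in_lvs && depth == 3) { # one block per LV
                lv = $1; order[++n] = lv; ext[lv] = 0
            }
            next
        }
        /^[ \t]*\}/ {                          # a line that closes a section
            if (in_lvs && depth == 2) in_lvs = 0
            depth--
            next
        }
        depth == 1 && $1 == "extent_size" { extent_size = $3 }
        in_lvs && depth == 4 && $1 == "extent_count" { ext[lv] += $3 }
        END {
            for (i = 1; i <= n; i++) {
                mib = ext[order[i]] * extent_size * 512 / 1048576
                printf "%s %d extents %.0f MiB\n", order[i], ext[order[i]], mib
            }
        }
    ' "$1"
}
```

Comparing this output for the archive against lvs output on the live system shows quickly whether a given copy predates the resize.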

2. Previewing the Restore

It’s a good idea to run a preview before applying any potentially destructive changes:

vgcfgrestore -t -f /etc/lvm/archive/vg_data_00007.vg vg_data

The -t flag performs a dry run: vgcfgrestore goes through the restore without writing any metadata to disk. Read the output carefully to make sure LVM recognizes all the expected logical volumes at their original sizes.
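To keep the dry-run output around for review, the preview can be wrapped so that it saves everything to a log and reports a clear verdict. A minimal sketch; the function name and default log path are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: dry-run a metadata restore and keep its output.
# Usage: preview_restore VG ARCHIVE_FILE [LOGFILE]
preview_restore() {
    local vg="$1" file="$2"
    local log="${3:-/root/vgcfgrestore-preview.log}"

    [ -f "$file" ] || { echo "no such file: $file" >&2; return 1; }

    # -t runs vgcfgrestore in test mode: nothing is written to disk.
    if vgcfgrestore -t -f "$file" "$vg" > "$log" 2>&1; then
        echo "dry run OK; review $log before restoring"
    else
        echo "dry run FAILED; see $log" >&2
        return 1
    fi
}
```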

3. Restoring the Metadata

With caution and confidence, we restored the metadata:

vgcfgrestore -f /etc/lvm/archive/vg_data_00007.vg vg_data

This command rewrote the volume group’s metadata to match the pre-resize state. After successful execution, it was necessary to re-scan the devices and activate the volume group:

vgscan

vgchange -ay

Suddenly, our logical volumes were visible again under /dev/vg_data/.
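For reference, the restore steps can be collected into one function. This is a sketch, not a transcript of our session: the function name is hypothetical, it activates only the named volume group rather than all of them, and the initial vgchange -an reflects our understanding that it is safest to run vgcfgrestore with the volume group's logical volumes deactivated (check the vgcfgrestore man page for your LVM version).

```bash
#!/usr/bin/env bash
# Hypothetical helper: restore archived metadata for VG, then bring it back up.
# Usage: apply_restore VG ARCHIVE_FILE
apply_restore() {
    local vg="$1" file="$2"

    # Deactivate the LVs first; on damaged metadata this may fail,
    # which is reported but not treated as fatal.
    vgchange -an "$vg" || echo "warning: could not deactivate $vg" >&2

    # Refuse to write anything unless the dry run succeeds.
    vgcfgrestore -t -f "$file" "$vg" || { echo "dry run failed" >&2; return 1; }
    vgcfgrestore -f "$file" "$vg"    || { echo "restore failed" >&2; return 1; }

    vgscan                           # re-scan devices for volume groups
    vgchange -ay "$vg" || return 1   # activate the restored LVs
    lvs "$vg"                        # show the LVs and their sizes
}
```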

4. Running Filesystem Checks

The logical volume may appear structurally intact, but filesystems might have suffered damage during the interrupted write operation. We ran fsck on the affected logical volume to repair any inconsistencies:

fsck /dev/vg_data/lv_media

After this step, the volume mounted successfully, and our NAS was back online.
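A slightly more cautious variant of this step: confirm the volume is not mounted, do a read-only pass first, and only run a repairing pass if problems were reported. A minimal sketch assuming an ext4 filesystem, where fsck hands -n and -y through to e2fsck; check_fs is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read-only fsck pass first, repair pass only if needed.
# Usage: check_fs DEVICE
check_fs() {
    local dev="$1" status

    # fsck must not run on a mounted filesystem; findmnt exits 0 if it finds one.
    if findmnt --source "$dev" > /dev/null; then
        echo "$dev is mounted; unmount it first" >&2
        return 1
    fi

    # -n: report problems but change nothing.
    fsck -n "$dev"
    status=$?
    [ "$status" -eq 0 ] && { echo "$dev: clean"; return 0; }

    echo "$dev: problems found (fsck exit $status); running repair pass"
    fsck -y "$dev"
    status=$?
    # Exit codes 0 and 1 mean clean or errors corrected; anything higher
    # needs a closer look.
    [ "$status" -le 1 ]
}
```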

Lessons Learned and Best Practices

This incident served as a hard lesson in system resilience and preventive strategy. Here are a few takeaways and recommendations:

- Always use an uninterruptible power supply (UPS) with enough runtime to carry sensitive operations, such as volume resizes, through to completion.

- Understand where your LVM backups are stored and verify their presence regularly. Files in /etc/lvm/archive can be lifesavers.

- Run a dry run of vgcfgrestore before doing the actual metadata restoration.

- Keep full system backups in addition to LVM’s metadata copies, which protect the volume layout but not the data itself. It’s better to have backups and not need them than to need them and not have them.
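The advice to verify LVM backups regularly can be turned into a small check, for example for a cron job. A minimal sketch with a hypothetical function name: it confirms that the backup file for a volume group exists, that its seqno matches the live volume group (assuming the vg_seqno report field of vgs), and that at least one archive is present.

```bash
#!/usr/bin/env bash
# Hypothetical health check for one volume group's metadata copies.
# Usage: check_lvm_backups VG [BACKUP_DIR] [ARCHIVE_DIR]
# Returns 0 if all checks pass, 1 otherwise.
check_lvm_backups() {
    local vg="$1"
    local backup_dir="${2:-/etc/lvm/backup}"
    local archive_dir="${3:-/etc/lvm/archive}"
    local backup="$backup_dir/$vg" saved live n_archives rc=0

    if [ -f "$backup" ]; then
        # seqno is bumped on every metadata change, so the backup should
        # match the live volume group (vg_seqno report field assumed).
        saved=$(awk '$1 == "seqno" { print $3; exit }' "$backup")
        live=$(vgs --noheadings -o vg_seqno "$vg" 2>/dev/null | tr -d '[:space:]')
        if [ -n "$saved" ] && [ "$saved" = "$live" ]; then
            echo "OK: $backup matches live metadata (seqno $live)"
        else
            echo "OUTDATED: $backup has seqno ${saved:-?}, live VG has ${live:-?}"
            rc=1
        fi
    else
        echo "MISSING: $backup"
        rc=1
    fi

    # Count archives named <vg>_<digits>...vg (so vg_data skips vg_data_old).
    n_archives=$(find "$archive_dir" -maxdepth 1 -name "${vg}_[0-9]*.vg" 2>/dev/null | wc -l | tr -d ' ')
    if [ "$n_archives" -gt 0 ]; then
        echo "OK: $n_archives archive(s) for $vg"
    else
        echo "MISSING: no archives for $vg in $archive_dir"
        rc=1
    fi
    return "$rc"
}
```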

Conclusion

Volume management is one of the most powerful aspects of Linux-based storage systems, but it comes with complexity that requires respect. In our case, the abrupt power loss during an LVM resize left our volume metadata in disarray. Thanks to LVM’s thoughtful design and built-in archive features, we were able to trace, preview, and restore the previously working configuration. Add a bit of fsck magic, and we had our NAS functional once more.

Sometimes these failures offer the best learning experience. With vigilance and backup awareness, you can prevent data loss and navigate your way out of most volume corruption scenarios.