Google’s unveiling of its Gemini model has sent shockwaves through the tech community. People are excited because it reportedly beats GPT-4 on benchmark tests and appears to interact with video in real time. But let’s pause and take a closer look at what’s happening behind the scenes of this highly touted technology.

Google Gemini Release

The recent reveal of Google’s Gemini model has been nothing short of remarkable. Google bills it as its most capable model yet, and the launch material makes GPT-4 look like a mere toy in comparison. The Gemini Ultra model in particular outscored GPT-4 on numerous benchmarks covering reading comprehension, math, spatial reasoning, and more.

However, there’s a catch.

It’s December 8th, 2023, and here at the Code Report, a deeper dive into Gemini’s capabilities reveals a different narrative.

Decoding Google’s Gemini Hands-On Demo

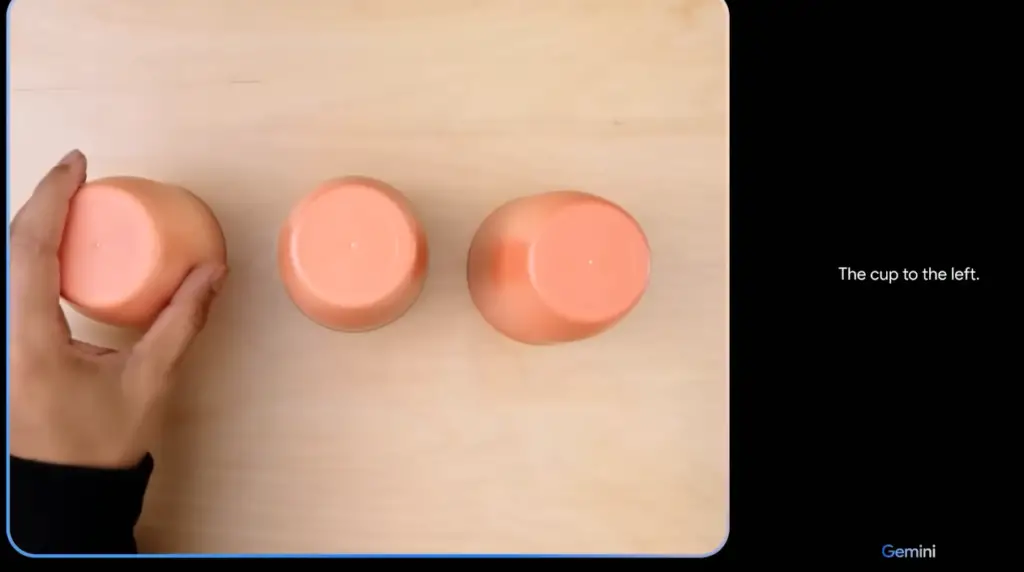

The demonstration of Gemini interacting with a live video feed, playing games like ‘one ball three cups,’ appears groundbreaking. But here’s the twist: it’s not exactly what it seems.

This interaction isn’t simply an AI comprehending and reacting to a live video stream; rather, it relies on carefully engineered multimodal prompting, in which images are paired with text instructions.

While Google presents these demos in a captivating light, the actual engineering behind the scenes is more intricate.

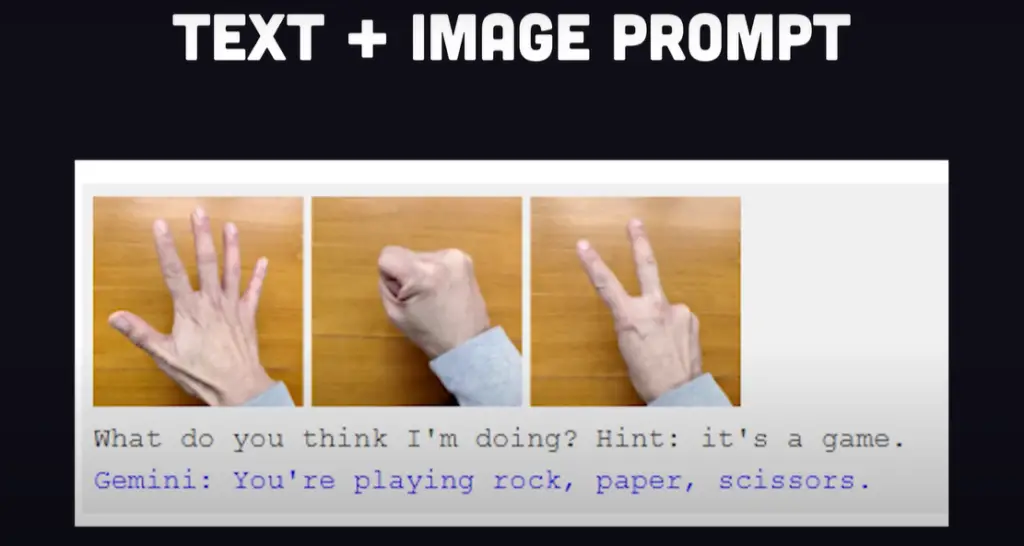

For example, in the rock-paper-scissors game, Gemini receives an explicit hint that it’s looking at a game, which makes the task far more straightforward. GPT-4 also handles similar prompts with ease.
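To make the idea of multimodal prompting with a hint more concrete, here is a minimal sketch of how such a prompt might be assembled. It does not call any real model or API; the `ImagePart`, `TextPart`, and `build_prompt` names, the file names, and the prompt wording are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Union


@dataclass
class ImagePart:
    """A still frame to include in the prompt (hypothetical container)."""
    path: str


@dataclass
class TextPart:
    """A text instruction or hint sent alongside the images."""
    text: str


Part = Union[ImagePart, TextPart]


def build_prompt(frame_paths: List[str], question: str, hint: str = "") -> List[Part]:
    """Put the frames first, then an optional hint, then the question."""
    parts: List[Part] = [ImagePart(p) for p in frame_paths]
    if hint:
        parts.append(TextPart(hint))
    parts.append(TextPart(question))
    return parts


# Hypothetical file names and wording, for illustration only.
frames = ["rock.png", "paper.png", "scissors.png"]
question = "What do you think I'm doing?"

bare = build_prompt(frames, question)
hinted = build_prompt(frames, question, hint="Hint: it's a game.")

for part in hinted:
    print(part)
```

The only difference between `bare` and `hinted` is one extra text part, yet that hint narrows the problem considerably. That is why a demo built on prompts like this says less about raw video understanding than it first appears to.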

Digging into Benchmarks: The Controversy

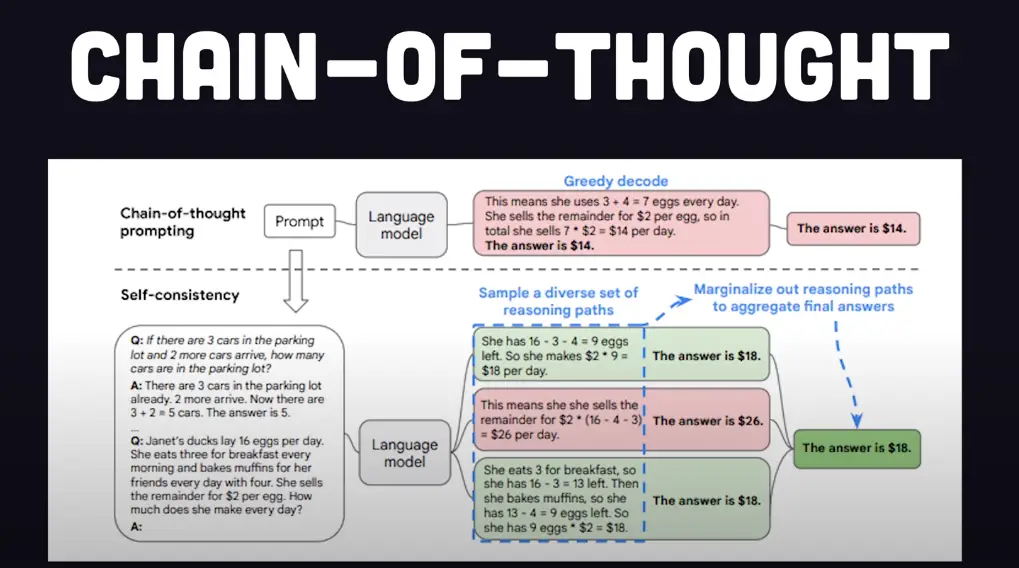

Gemini’s claimed superiority over human experts on the Massive Multitask Language Understanding (MMLU) benchmark raises eyebrows. The headline comparison sets Gemini’s Chain of Thought 32 (CoT@32) result against GPT-4’s 5-shot result, which seems perplexing at first glance because the two numbers come from different evaluation methods.

The technical paper dives into the intricacies. In the 5-shot benchmark, the model is shown only a handful of worked examples and must generalize to complex subjects from that very limited set of specific data.

The Chain of Thought methodology, by contrast, has the model work through numerous intermediate reasoning steps before it selects an answer, so the two scores are not measured under the same conditions.
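The two evaluation settings described above can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the procedure from Google’s technical paper, which may differ in detail: `five_shot_prompt` builds a prompt from five worked examples, and `cot_at_k` samples several reasoning chains and takes a majority vote on the final answer. The `make_noisy_model` function is a hypothetical stand-in for a real model.

```python
import random
from collections import Counter
from typing import Callable, List, Tuple


def five_shot_prompt(examples: List[Tuple[str, str]], question: str) -> str:
    """Build a few-shot prompt: up to five worked Q/A pairs, then the new question."""
    shots = "\n\n".join(f"Q: {q}\nA: {a}" for q, a in examples[:5])
    return f"{shots}\n\nQ: {question}\nA:"


def cot_at_k(sample_answer: Callable[[str], str], question: str, k: int = 32) -> str:
    """Sample k reasoning chains and return the most common final answer."""
    votes = Counter(sample_answer(question) for _ in range(k))
    return votes.most_common(1)[0][0]


def make_noisy_model(correct: str, accuracy: float, seed: int = 0) -> Callable[[str], str]:
    """Hypothetical stand-in for a model: answers correctly with probability `accuracy`."""
    rng = random.Random(seed)
    wrong = [c for c in ["A", "B", "C", "D"] if c != correct]

    def sample(_question: str) -> str:
        # A real model would generate reasoning text here; we only simulate the final choice.
        return correct if rng.random() < accuracy else rng.choice(wrong)

    return sample


model = make_noisy_model(correct="B", accuracy=0.6)
print("single sample:", model("Which option is right?"))
print("majority of 32:", cot_at_k(model, "Which option is right?", k=32))
```

Voting over many sampled answers tends to smooth out individual mistakes, so a score obtained this way is not directly comparable to one obtained from a single 5-shot answer.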

Examining the Numbers and Skepticism

On closer examination, the benchmarks paint a conflicting picture: Gemini excels in certain areas, but its performance falls short in others. That discrepancy, together with the differing evaluation methods, invites skepticism. Benchmarks that are not neutral or validated by a third party should be treated with caution.

Conclusion: Is Google Gemini AI Fake?

Gemini AI might seem amazing, but there’s more to it than meets the eye. Benchmark scores and polished demo videos alone aren’t enough to tell us how good it really is.

The world of AI keeps changing, and even though Gemini seems promising, it’s smart to question it until we understand it better. We can enjoy these impressive new AI models, but it’s essential not to believe everything we’re shown without thinking about it first.

In closing, this has been the Code Report, shedding light on Google’s Gemini AI. Thank you for tuning in, and until next time, stay curious and critical of the tech wonders that surround us.