Artificial intelligence keeps reaching new milestones, with AI chatbots such as ChatGPT, Google Bard, and Claude now in everyday use. However, these models share a major weakness: memory. How much a model can keep in view at once is set when it is trained, and that limit shapes what it can do in every conversation afterwards.

Many AI models have a limited context window, which means they can only consider a fixed amount of information when generating responses.

Memory constraints pose a significant challenge in the development of AI systems, whether it’s AI Chatbots, LLMs, or any other models.

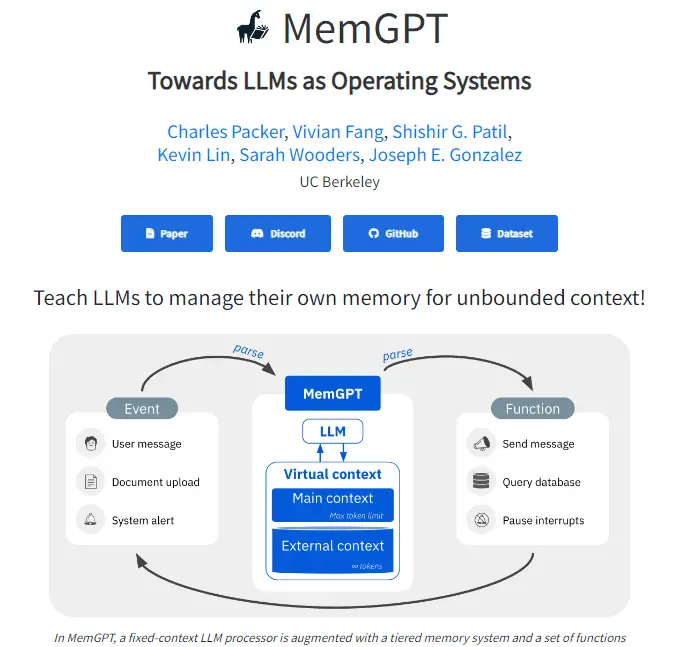

I recently came across a research paper called “MemGPT: Towards LLMs as Operating Systems,” which proposes a way around this memory constraint.

In this article, we’ll dive into the key aspects of MemGPT, explore the research paper, and guide you through the installation process for MemGPT.

What is MemGPT?

MemGPT is an AI project that aims to improve the memory capabilities of artificial intelligence models. It enables AI systems to effectively remember and recall information during conversations, making them better at tasks like long-term chat and document analysis.

MemGPT achieves this by emulating the memory hierarchy of a computer’s operating system. The AI manages its own memory autonomously, which creates the illusion of an extended context window and allows more coherent, context-aware interactions.

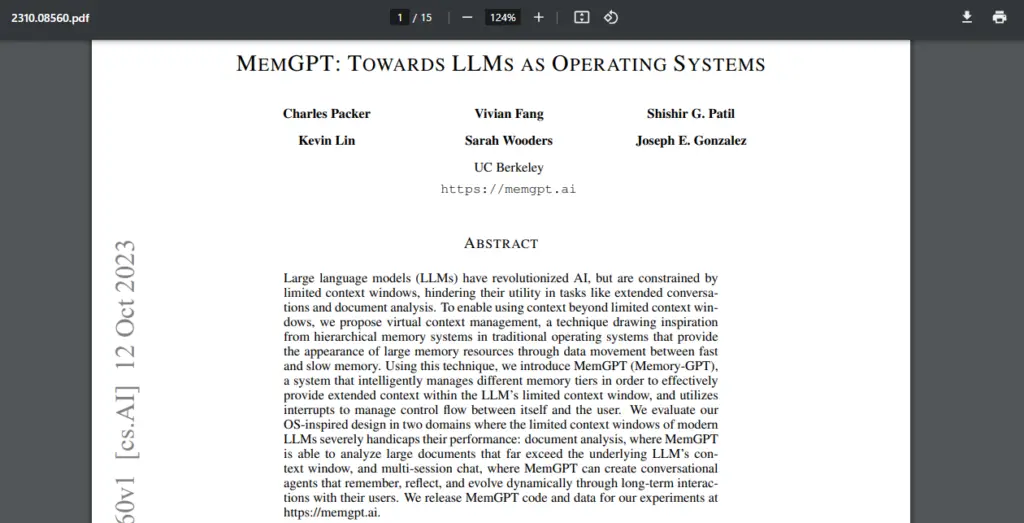

MemGPT Research Paper

The Challenge of Limited Memory in AI:

Artificial intelligence models, such as GPT (Generative Pre-trained Transformers), have revolutionized the field by generating human-like text based on the context they are given.

However, these models suffer from a critical limitation: they have very limited memory. Beyond what they learned from their training data, they can only consider the information that fits inside a restricted context window.

For instance, the 32K variant of GPT-4 can handle roughly 32,000 tokens, but this is still quite limiting.

Two specific use cases highlight the constraints imposed by limited context windows:

- Long-Term Chat: Engaging in a chat conversation that spans weeks, months, or even years while maintaining context and coherence can be challenging with a limited context window.

- Chat with Documents: If you need to refer to extensive documents or datasets during a conversation, the context window quickly becomes a bottleneck. Storing and recalling this information within a conversation is challenging.
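To make the bottleneck concrete, here is a minimal sketch of what a fixed context window does to a long conversation. It assumes that whitespace-separated words stand in for real tokenizer tokens, and the helper names `count_tokens` and `fit_to_window` are hypothetical, chosen only for this illustration.

```python
from collections import deque


def count_tokens(text: str) -> int:
    # Assumption: whitespace-separated words approximate tokenizer tokens.
    return len(text.split())


def fit_to_window(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined size fits in max_tokens."""
    kept: deque[str] = deque()
    used = 0
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break  # this message and everything older is dropped
        kept.appendleft(message)
        used += cost
    return list(kept)


history = [
    "My name is Ada and I live in Lisbon.",
    "What is the weather like today?",
    "Can you recommend a good book?",
    "What is my name?",
]

# With a 16-token budget the oldest message no longer fits.
window = fit_to_window(history, max_tokens=16)
print(window)
```

The first message, which contains the user's name, falls out of the window, so a model that sees only `window` has no way to answer the final question.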

The Solution: MemGPT

The research paper, “MemGPT: Towards LLMs as Operating Systems,” presents an innovative solution to the problem of limited memory in AI models.

This project, developed by a team at UC Berkeley, introduces a concept called a “virtual context management system.” This system is designed to emulate the memory hierarchy of an operating system.

Just as a computer operating system manages different tiers of memory (CPU registers and caches, RAM, and long-term storage), MemGPT proposes a similar hierarchy for large language models (LLMs):

- Main Context: This represents the standard context window, which has a predefined token limit (similar to the fixed context in traditional models).

- External Context: This is where MemGPT introduces a groundbreaking feature. Unlike the main context, the external context has virtually unlimited tokens and can store extensive information.

The core idea behind MemGPT is to provide the illusion of infinite context while still using fixed context models.

In other words, MemGPT can seamlessly access and integrate information from external contexts when needed. This memory management is automated and executed through function calls.
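The two-tier idea can be sketched in a few lines. This is a toy model under stated assumptions, not MemGPT's implementation: the class `VirtualContext`, its methods, and the word-count token estimate are all hypothetical, and a simple keyword match stands in for whatever search the real system uses.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualContext:
    """Toy two-tier memory: a bounded main context plus an unbounded external store."""

    max_main_tokens: int
    main: list[str] = field(default_factory=list)
    external: list[str] = field(default_factory=list)

    @staticmethod
    def _tokens(text: str) -> int:
        # Assumption: whitespace-separated words approximate tokens.
        return len(text.split())

    def main_size(self) -> int:
        return sum(self._tokens(m) for m in self.main)

    def add(self, message: str) -> None:
        """Append to main context; on overflow, move the oldest entries to external context."""
        self.main.append(message)
        while self.main_size() > self.max_main_tokens and len(self.main) > 1:
            self.external.append(self.main.pop(0))

    def search_external(self, keyword: str) -> list[str]:
        """Return evicted entries that mention the keyword (case-insensitive)."""
        return [m for m in self.external if keyword.lower() in m.lower()]


ctx = VirtualContext(max_main_tokens=12)
for msg in [
    "The project deadline is Friday.",
    "Lunch was great today.",
    "Remind me what we discussed.",
    "When is the deadline?",
]:
    ctx.add(msg)

print(ctx.main)                         # only the most recent messages remain
print(ctx.search_external("deadline"))  # the evicted fact is still recoverable
```

Nothing is lost when the main context overflows; older entries are moved out and can be looked up again when a later question needs them.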

Autonomous Memory Management

One of the remarkable aspects of MemGPT is its ability to autonomously manage its own memory. It can perform the following functions:

- Retrieve memory from an external context.

- Edit its existing memory.

- Yield results to provide responses.

This design allows MemGPT to make repeated context modifications during a single task, enhancing its ability to utilize its limited context effectively.
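The three functions above can be sketched as a small dispatch loop. Everything here is an assumption made for illustration: `scripted_model` is a stand-in that replays a fixed list of function calls instead of querying an LLM, and the names `retrieve`, `edit`, and `yield` simply mirror the list above rather than MemGPT's actual function names.

```python
import json

# Hypothetical memory state for the illustration.
working_memory = {"user_name": "unknown"}
external_store = ["User said their name is Ada.", "User likes hiking."]
observations = []  # results that a real system would feed back into the context


def retrieve(query: str) -> list[str]:
    """Look up entries in the external store that mention the query."""
    return [item for item in external_store if query.lower() in item.lower()]


def edit(key: str, value: str) -> str:
    """Overwrite one field of the working memory."""
    working_memory[key] = value
    return "ok"


FUNCTIONS = {"retrieve": retrieve, "edit": edit}


def scripted_model(step: int) -> str:
    """Stand-in for an LLM: returns one JSON-encoded function call per step."""
    script = [
        {"function": "retrieve", "args": {"query": "name"}},
        {"function": "edit", "args": {"key": "user_name", "value": "Ada"}},
        {"function": "yield", "args": {"message": "Your name is Ada."}},
    ]
    return json.dumps(script[step])


def run_task(max_steps: int = 10) -> str:
    """Execute function calls until the model yields a final response."""
    for step in range(max_steps):
        call = json.loads(scripted_model(step))
        if call["function"] == "yield":
            return call["args"]["message"]
        result = FUNCTIONS[call["function"]](**call["args"])
        observations.append(result)
    raise RuntimeError("model never yielded a response")


reply = run_task()
print(reply)
print(working_memory)
```

The loop runs two memory operations before producing any output, which is the "repeated context modifications during a single task" pattern in miniature.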

A Closer Look at Memory Management

The memory management process in MemGPT involves the following components:

Inputs: These include messages from users, documents uploaded during the conversation, system messages, and timers.

Virtual Context: This is where the magic happens. It combines the main context, which has a fixed token limit, with the external context, which is effectively unbounded in size.

LLM Processor: This is the heart of MemGPT, responsible for processing language and generating responses based on the provided context.

Output: This is the final response that MemGPT generates.

The Potential of MemGPT

MemGPT’s approach to memory management offers exciting possibilities for AI applications. It opens the door to more robust and coherent long-term chat interactions and makes it feasible to engage in complex conversations involving extensive documents and data.

The key differentiator is that MemGPT achieves this without incurring prohibitively high computational costs, which would be the case if context windows were simply expanded.
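A rough back-of-the-envelope calculation shows why simply enlarging the window gets expensive. In a standard transformer, self-attention compares every token with every other token, so that part of the cost grows roughly with the square of the context length. The function below is a simplified sketch under that assumption only; it ignores every other cost and any optimized attention variant, and `relative_attention_cost` is a hypothetical helper.

```python
def relative_attention_cost(context_tokens: int, baseline_tokens: int) -> float:
    """Ratio of pairwise attention comparisons, assuming cost scales as n**2."""
    return (context_tokens / baseline_tokens) ** 2


for n in (8_000, 32_000, 128_000):
    ratio = relative_attention_cost(n, baseline_tokens=8_000)
    print(f"{n:>7} tokens -> {ratio:.0f}x the attention cost of 8,000 tokens")
```

Under this assumption, a window 4 times larger costs 16 times as much in attention, and one 16 times larger costs 256 times as much.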

MemGPT Use Cases:

MemGPT isn’t just a theoretical concept. It has practical applications, especially in two key use cases: document analysis and multi-session chat.

Document analysis enables you to have a coherent conversation with an AI model while referencing documents far larger than a fixed context window, which traditional models cannot handle in a single prompt.

Multi-session chat allows for extended conversations that can span days, weeks, months, or even years, maintaining consistency and relevance.

MemGPT Installation Tutorial

Now that we’ve explored the research paper and the concept of MemGPT, it’s time to get hands-on experience by installing and using MemGPT.

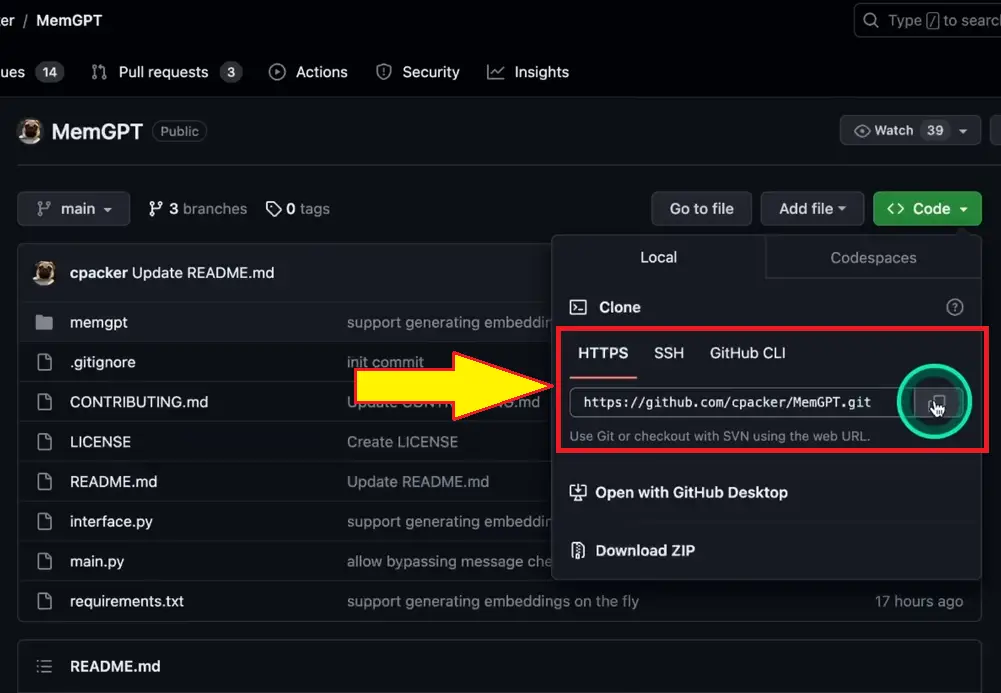

The authors of MemGPT have generously provided the code for this project, making it accessible to the AI community.

Prerequisites:

Before we dive into the installation process, there are a few prerequisites to ensure you have everything you need:

- Git and a GitHub Account: Install Git so you can clone the MemGPT code from GitHub. An account is not required to clone a public repository, but you will need one if you want to open issues or contribute.

- Conda (Optional): While not mandatory, using Conda for managing Python environments is highly recommended for this and other AI projects.

Installation Steps

Follow these steps to install MemGPT:

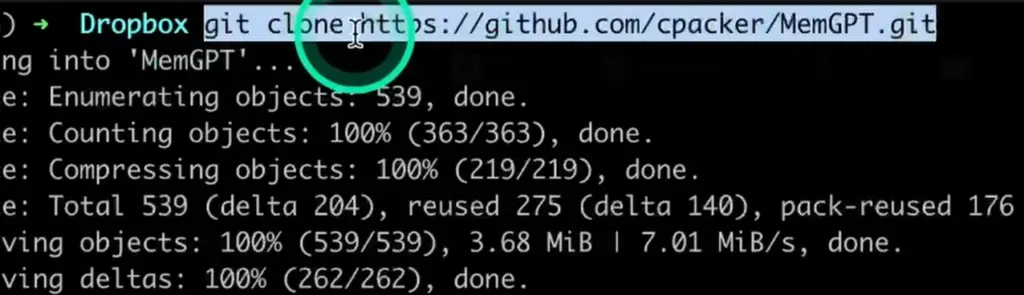

Step 1: Clone the Repository

Visit the MemGPT GitHub repository.

Click on the green “Code” button and copy the GitHub URL.

Open your terminal or command prompt and use the git clone command to clone the repository.

git clone <GitHub URL>

Step 2: Create a Conda Environment (Optional)

- If you choose to use Conda, create a new Conda environment by running the following command:

conda create -n memgpt python=3.10

Step 3: Activate the Conda Environment (Optional)

- Activate the Conda environment with the following command:

conda activate memgpt

Step 4: Navigate to the MemGPT Directory

- Change your current directory to the MemGPT folder you cloned:

cd MemGPT

Step 5: Install Requirements

- Install the required packages using pip. This will ensure you have all the dependencies needed to run MemGPT:

pip install -r requirements.txt

Step 6: Set Up Your OpenAI API Key

- To use MemGPT, you need an OpenAI API key. If you don’t have one, you can sign up on the OpenAI platform and generate an API key.

- Set your API key as an environment variable:

export OPENAI_API_KEY=<Your API Key>
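If you want to confirm from Python that the key is visible before launching anything, a small check like the one below can help. This is a convenience sketch, not part of MemGPT: the helper name `get_openai_api_key` is hypothetical, and the only thing taken from the step above is the `OPENAI_API_KEY` variable name.

```python
import os
from typing import Mapping


def get_openai_api_key(env: Mapping[str, str] = os.environ) -> str:
    """Return the API key from the environment, or fail with a clear message."""
    key = env.get("OPENAI_API_KEY", "").strip()
    if not key:
        raise RuntimeError(
            "OPENAI_API_KEY is not set; export it in this shell before running MemGPT."
        )
    return key


# Demonstration with an explicit mapping and a placeholder value.
# Call get_openai_api_key() with no arguments to check the real environment.
key = get_openai_api_key({"OPENAI_API_KEY": "sk-example"})
print("Key found, length:", len(key))
```

Note that `export` sets the variable only for the current shell session, so the check needs to run in the same terminal where you set the key.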

Step 7: Running MemGPT

Now you’re ready to interact with MemGPT. The provided documentation in the MemGPT repository contains specific commands for various use cases. For example, you can perform document retrieval by using a command like:

python3 main.py --archival_storage_files_compute_embeddings=<Path to Your Documents>

This command will compute embeddings for the specified documents and allow you to ask questions based on this information.
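To illustrate the general idea of computing embeddings once and then answering questions against them, here is a toy sketch. It is not MemGPT's implementation: a bag-of-words count vector stands in for the output of a real embedding model, the sample documents are invented, and the helpers `embed`, `cosine`, and `search` are hypothetical names.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    # Assumption: word counts stand in for a learned embedding vector.
    return Counter(word.strip(".,?!").lower() for word in text.split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


documents = [
    "MemGPT pages information between main context and external context.",
    "Conda environments isolate Python dependencies for each project.",
    "An API key authenticates requests to the OpenAI platform.",
]

# Embeddings are computed once, up front, and reused for every question.
index = [(doc, embed(doc)) for doc in documents]


def search(question: str, top_k: int = 1) -> list[str]:
    """Return the top_k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(index, key=lambda pair: cosine(q, pair[1]), reverse=True)
    return [doc for doc, _ in ranked[:top_k]]


best = search("How does MemGPT use external context?")
print(best)
```

Only the best-matching passages need to be placed into the model's limited context, which is what makes conversations over large document sets workable.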

Please note that MemGPT is an evolving project, and additional features are being added to make it more user-friendly and cost-effective.

For the most up-to-date information and assistance, you can join the MemGPT community on Discord, where the developers are actively engaged with users.

Conclusion

MemGPT is a promising step forward in addressing the memory limitations of AI models. The ability to manage memory effectively and autonomously opens up new possibilities for long-term chats, document analysis, and complex AI interactions.

By following the installation steps provided, you can start experimenting with MemGPT and discover its potential for yourself.

As the project continues to evolve, we can expect even more exciting developments in the world of artificial intelligence and natural language processing.

Stay tuned for updates and enjoy exploring the future of AI memory management with MemGPT.

MemGPT official link is here.