DragGAN AI is an innovative photo editor powered by advanced artificial intelligence technology.

With DragGAN AI, all you need to do is click on the image to define pairs of handle points and target points. DragGAN AI then drags the content at each handle point to its target.

Let’s dive into the workings of DragGAN AI and understand the underlying formulas in a simple and friendly way.

Understanding the StyleGAN2 Architecture:

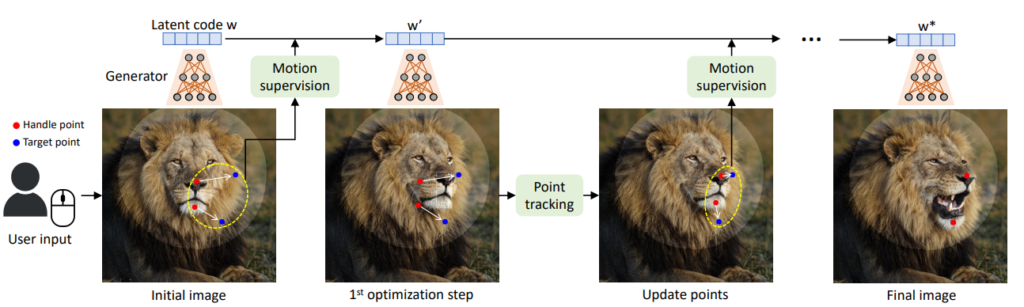

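DragGAN AI is built on the StyleGAN2 architecture. In StyleGAN2, a 512-dimensional latent code 𝒛, sampled from a standard normal distribution, is mapped by a mapping network to an intermediate latent code 𝒘. The generator 𝐺 then turns 𝒘 into the final image. Along the way, 𝒘 is copied and fed to each layer of 𝐺, and different layers control different levels of attributes (using a separate 𝒘 for each layer gives an extended latent space often called W+). Because 𝐺 maps a low-dimensional latent space onto realistic images, it can be seen as modeling an image manifold.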
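To make the shapes in this pipeline concrete, here is a toy sketch of the path from 𝒛 to a per-layer code. It is not StyleGAN2: `toy_mapping_network` is a hypothetical single-layer stand-in for the real (deeper) mapping network, the weights are random, and no generator 𝐺 is implemented. `NUM_LAYERS = 18` is an assumption matching a 1024x1024 StyleGAN2 generator.

```python
import math
import random

LATENT_DIM = 512  # StyleGAN2 uses 512-dimensional z and w
NUM_LAYERS = 18   # assumed: generator layers that each receive a copy of w (1024x1024 model)


def toy_mapping_network(z, weights):
    """Hypothetical stand-in for the mapping network: one linear layer + leaky ReLU (slope 0.2)."""
    w = []
    for row in weights:
        s = sum(a * b for a, b in zip(row, z))
        w.append(s if s > 0 else 0.2 * s)
    return w


def to_w_plus(w, num_layers):
    """Copy w once per generator layer, giving a (num_layers x 512) code in W+."""
    return [list(w) for _ in range(num_layers)]


rng = random.Random(0)
z = [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]           # z ~ N(0, I)
weights = [[rng.gauss(0.0, 1.0 / math.sqrt(LATENT_DIM)) for _ in range(LATENT_DIM)]
           for _ in range(LATENT_DIM)]                         # random toy weights
w = toy_mapping_network(z, weights)                            # intermediate latent code w
w_plus = to_w_plus(w, NUM_LAYERS)                              # one copy of w per layer
```

Starting from identical copies means every layer initially sees the same 𝒘; an editor that optimizes in W+ is then free to change the copies independently.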

Interactive Point-based Manipulation:

Now, let’s focus on the interactive point-based manipulation feature of DragGAN AI. Here’s how it works:

Handle Points and Target Points:

Given an image, you choose a set of handle points {𝒑𝑖} and their corresponding target points {𝒕𝑖}. Each handle point marks a semantic position on an object, such as the nose or the jaw of a person, and the goal is to move the object so that each handle point reaches its target.
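A minimal way to represent these user inputs is one record per handle/target pair. The sketch below uses a hypothetical `DragPair` class (not part of any DragGAN code) that stores pixel coordinates and computes the remaining distance and the unit direction from 𝒑𝑖 toward 𝒕𝑖, which is the direction the editor wants to move the content.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class DragPair:
    """Hypothetical record for one handle point p_i and its target t_i, in (x, y) pixels."""
    handle: Tuple[float, float]
    target: Tuple[float, float]

    def distance(self) -> float:
        """Euclidean distance ||t_i - p_i||_2 still to be covered."""
        return math.dist(self.handle, self.target)

    def direction(self) -> Tuple[float, float]:
        """Unit vector (t_i - p_i) / ||t_i - p_i||_2 pointing from the handle to the target."""
        dist = self.distance()
        if dist == 0:
            return (0.0, 0.0)  # handle already sits on its target
        return ((self.target[0] - self.handle[0]) / dist,
                (self.target[1] - self.handle[1]) / dist)


pairs = [
    DragPair(handle=(120.0, 200.0), target=(150.0, 240.0)),  # e.g. a point on the jaw
    DragPair(handle=(128.0, 140.0), target=(100.0, 140.0)),  # e.g. a point on the nose
]
for pair in pairs:
    print(pair.distance(), pair.direction())
```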

Optimization Process:

DragGAN AI edits the image by optimizing the latent code 𝒘. Each optimization step consists of two sub-steps:

a. Motion Supervision:

A loss that pulls each handle point toward its target point is used to optimize the latent code 𝒘. One optimization step yields a new latent code 𝒘′ and a new image in which the object has moved slightly. Each step moves a handle point only a small distance toward its target, and the exact length of that step is not known in advance: it depends on complex optimization dynamics and varies across objects and their parts.

b. Point Tracking:

Because the step length is unknown, the new positions of the handle points have to be found after each optimization step. DragGAN AI tracks each handle point with a nearest-neighbor search in the generator's feature space, which ensures that the right points are supervised in the next motion supervision step.
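Point tracking by nearest-neighbor search, as the DragGAN paper describes it, compares the feature that the handle point had in the initial image with the features in a small window around its current position in the new image, and moves the handle to the best match. The sketch below assumes plain nested lists for the feature map, an L1 feature distance, and a square window of half-size `r2`; `track_point` is a hypothetical helper, not DragGAN's code.

```python
def l1(a, b):
    """L1 distance between two feature vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))


def track_point(feature_map, f_i, p_i, r2):
    """Return the pixel near p_i whose feature is closest (in L1) to f_i.

    feature_map: new feature map, indexed as feature_map[y][x] -> feature vector.
    f_i: feature of the handle point in the initial image (kept fixed across steps).
    p_i: current handle position (x, y) in integer pixels.
    r2: half-size of the square search window around p_i.
    """
    height, width = len(feature_map), len(feature_map[0])
    x0, y0 = p_i
    best, best_dist = p_i, float("inf")
    for y in range(max(0, y0 - r2), min(height, y0 + r2 + 1)):
        for x in range(max(0, x0 - r2), min(width, x0 + r2 + 1)):
            d = l1(feature_map[y][x], f_i)
            if d < best_dist:  # strict '<' keeps the first best match found
                best, best_dist = (x, y), d
    return best


# Toy 5x5 feature map with 2-D features: all zeros except one distinctive pixel.
F_new = [[[0.0, 0.0] for _ in range(5)] for _ in range(5)]
F_new[1][3] = [1.0, 2.0]   # the handle's content has moved to (x=3, y=1)
f_handle = [1.0, 2.0]      # feature recorded at the handle before optimization
print(track_point(F_new, f_handle, p_i=(2, 2), r2=1))  # -> (3, 1)
```

Keeping `f_i` fixed to the initial feature, rather than updating it every step, stops small tracking errors from accumulating into drift.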

Iterative Process:

The optimization repeats until the handle points reach their target positions, which typically takes 30 to 200 iterations. You can also stop the optimization at any point and evaluate the result. If you’re not satisfied, you can add new handle and target points and continue editing until you achieve the desired outcome.
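The iterative process can be sketched as a loop that alternates the two sub-steps and stops once every handle point is within a small pixel distance of its target. Everything here is an assumption for illustration: the tolerance rule (1 px for up to five handle points, 2 px otherwise), `max_iters=200`, and the hypothetical callables `optimize_step` and `track`, which stand in for motion supervision and point tracking. The toy simulation is not DragGAN; it only mimics content that moves by a varying, unknown step length.

```python
import math


def stop_threshold(num_points):
    """Assumed pixel tolerance: 1 px for up to 5 handle points, 2 px otherwise."""
    return 1.0 if num_points <= 5 else 2.0


def drag_loop(handles, targets, optimize_step, track, max_iters=200):
    """Alternate motion supervision and point tracking; return (handles, iterations used)."""
    tol = stop_threshold(len(handles))
    for it in range(max_iters):
        if all(math.dist(p, t) <= tol for p, t in zip(handles, targets)):
            return handles, it
        optimize_step(handles, targets)  # hypothetical: one update of the latent code
        handles = track(handles)         # hypothetical: re-locate the handle points
    return handles, max_iters


# Toy simulation: the "content" drifts toward each target by a step whose
# length varies per iteration and is never revealed to the loop.
content = [[10.0, 10.0], [40.0, 5.0]]
step_sizes = [0.6, 1.3, 0.9]
calls = {"n": 0}


def toy_optimize_step(handles, targets):
    step = step_sizes[calls["n"] % len(step_sizes)]
    calls["n"] += 1
    for c, t in zip(content, targets):
        dist = math.dist(c, t)
        if dist > 0:
            move = min(step, dist)
            c[0] += (t[0] - c[0]) / dist * move
            c[1] += (t[1] - c[1]) / dist * move


def toy_track(handles):
    return [tuple(c) for c in content]


targets = [(20.0, 10.0), (40.0, 25.0)]
final, iters = drag_loop([(10.0, 10.0), (40.0, 5.0)], targets, toy_optimize_step, toy_track)
print(final, iters)
```

Stopping early or re-specifying points corresponds to breaking out of the loop and calling it again with new handle and target points.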

How the DragGAN AI Photo Editor Works in Detail:

Motion Supervision:

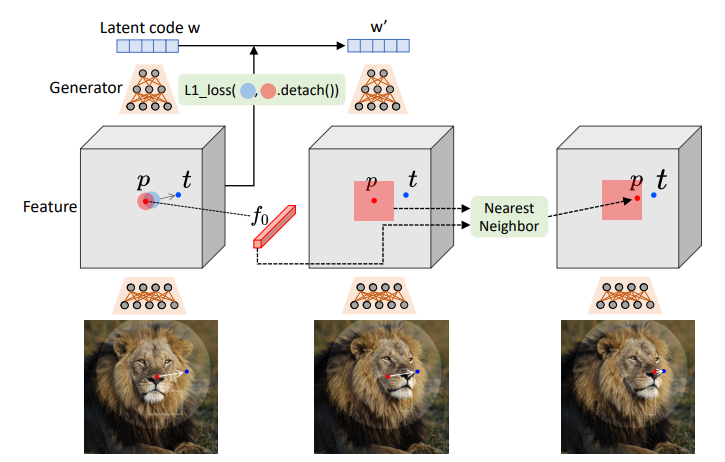

The key challenge in supervising point motion for a GAN-generated image is how to formulate an effective motion supervision loss. In DragGAN AI, a motion supervision loss is proposed without relying on additional neural networks. Here’s the idea behind it:

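- Feature Maps: DragGAN AI supervises motion with the generator's own intermediate feature maps (F), which are highly discriminative. The feature maps after the 6th block of StyleGAN2 work best, as they offer a good tradeoff between resolution and discriminativeness; they are resized to the resolution of the final image with bilinear interpolation.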

- Motion Supervision Loss: To move a handle point 𝒑𝑖 toward its target 𝒕𝑖, DragGAN AI uses a shifted patch loss. It takes a small circular patch around 𝒑𝑖 and requires the features of that patch, shifted by one small step 𝒅𝑖 (a unit vector pointing from 𝒑𝑖 to 𝒕𝑖), to match the features of the unshifted patch. Because the shifted positions generally fall between pixels, their features are obtained by bilinear interpolation. If a binary mask (M) is provided, the loss also includes a reconstruction term that keeps the unmasked region fixed.

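- Optimization Step: At each motion supervision step, the latent code (𝒘) is updated with one optimization step on this loss. The gradient is not back-propagated through the features of the original patch, F(𝒒𝑖); only the shifted features F(𝒒𝑖 + 𝒅𝑖) receive gradients. This asymmetry makes the content at 𝒑𝑖 move toward 𝒑𝑖 + 𝒅𝑖, and therefore toward the target, rather than the other way around.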
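Written out, the motion supervision loss described in these bullets takes roughly the following form. This follows the formulation in the DragGAN paper; here sg(·) is shorthand for the stop-gradient on the unshifted features, Ω₁(𝒑𝑖, r₁) is the set of pixels within distance r₁ of 𝒑𝑖, F₀ is the feature map of the initial image, M is the optional binary mask, and λ is a weighting hyperparameter.

```latex
\mathcal{L} \;=\; \sum_{i=1}^{n} \; \sum_{\mathbf{q}_i \in \Omega_1(\mathbf{p}_i,\, r_1)}
  \big\lVert \operatorname{sg}\!\big(\mathbf{F}(\mathbf{q}_i)\big) - \mathbf{F}(\mathbf{q}_i + \mathbf{d}_i) \big\rVert_1
  \;+\; \lambda \,\big\lVert (\mathbf{F} - \mathbf{F}_0) \cdot (1 - \mathbf{M}) \big\rVert_1,
\qquad
\mathbf{d}_i = \frac{\mathbf{t}_i - \mathbf{p}_i}{\lVert \mathbf{t}_i - \mathbf{p}_i \rVert_2}
```

Minimizing the first term with respect to 𝒘 while F(𝒒𝑖) is held constant encourages the patch content to shift by 𝒅𝑖 toward 𝒕𝑖; the second term only applies when a mask is given.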

DragGAN AI revolutionizes photo editing by combining interactivity with advanced AI techniques. It lets you manipulate specific features in your images with just a few clicks, whether that means refining facial features, adjusting objects, or reworking the overall composition.

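Image and content reference: “Drag Your GAN: Interactive Point-based Manipulation on the Generative Image Manifold” (SIGGRAPH 2023), https://vcai.mpi-inf.mpg.de/projects/DragGAN/data/paper.pdf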
