Mistral AI, a new French AI startup, captured headlines when it raised $113 million in funding in June 2023, before it had a product to showcase.

Fast forward to today, and Mistral AI has taken a giant leap forward by releasing not one but two impressive open-source language models, each boasting a staggering 7 billion parameters.

In this article, we’ll dive into the details of Mistral AI’s release, explore the motivation behind it, and provide you with a step-by-step guide on how to use these models effectively.

Introducing Mistral AI

Mistral AI’s journey began with a bang as it secured a significant amount of funding without having a product ready for the market.

What makes Mistral AI’s release even more intriguing is its commitment to open source. They have made their 7 billion parameter models, named ‘Mistral,’ available for public use without any restrictive licenses.

Understanding Mistral AI’s Motivation

Mistral AI’s mission is clear: to bring open AI models to the forefront of AI. This goal is akin to the development of open-source tools like WebKit, the Linux operating system, and Kubernetes, which have played pivotal roles in shaping the digital world we know today.

Their approach is ambitious and aligns with the idea that the AI community needs to collaborate and share resources to foster growth.

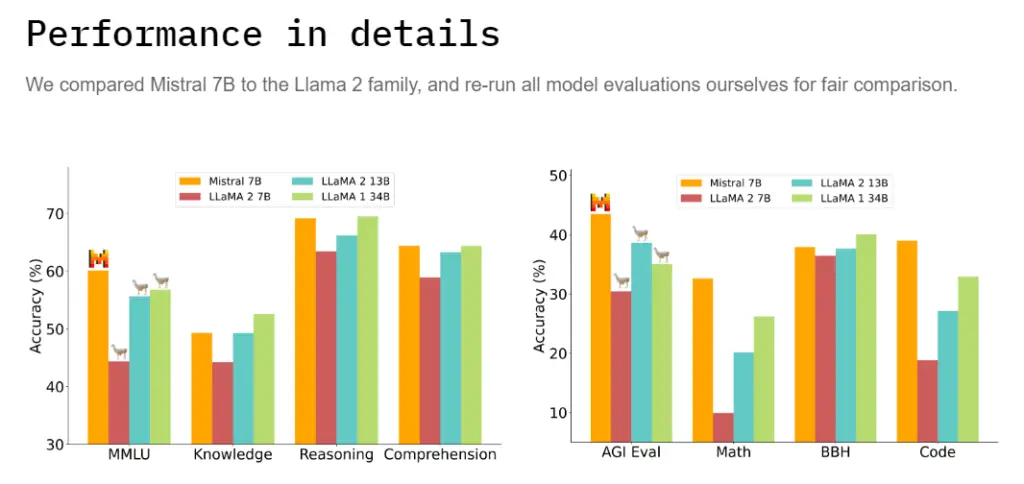

Performance That Speaks Volumes

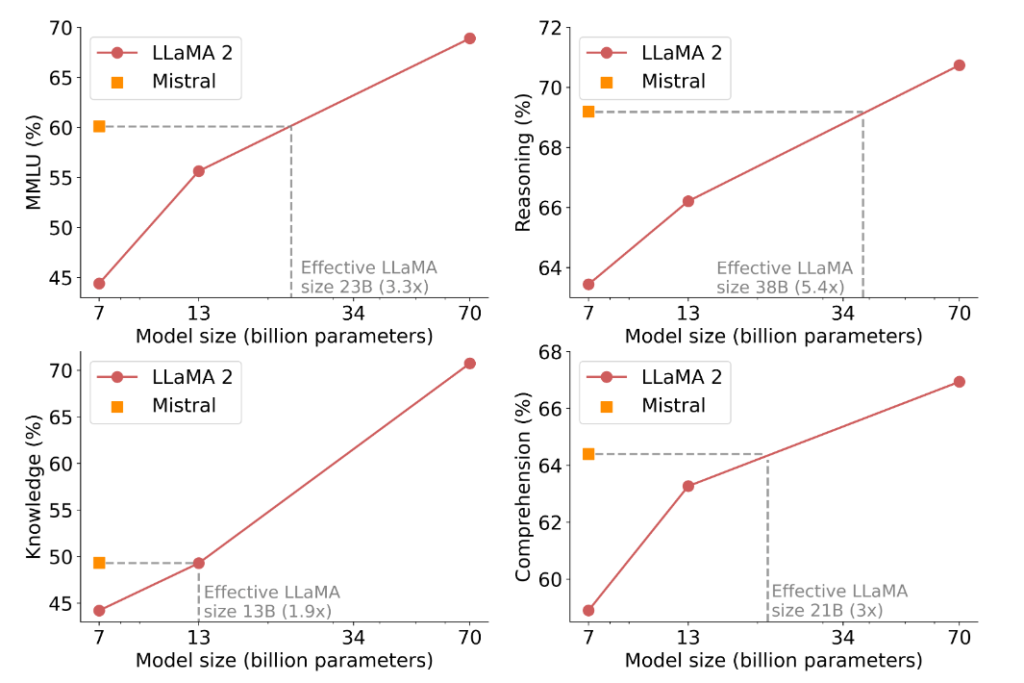

Mistral AI’s claim to fame with its 7 billion parameter models lies in its impressive performance. According to the company, these models outperform existing open models, including the renowned Llama 2 7 billion parameter models, in various benchmarks.

The real surprise is that in some benchmarks, Mistral AI’s 7 billion parameter models even surpass the performance of the larger Llama 2 13 billion parameter models.

The Small Model Revolution

Mistral AI’s release of a 7 billion parameter model also highlights the growing importance of smaller AI models. While much attention has been focused on massive models, there’s a growing recognition that smaller, more efficient models have their place in the AI landscape.

Microsoft, for instance, has been actively working on developing smaller models that can run efficiently on single GPUs and edge devices. This shift underscores the idea that AI models need not always be colossal; they should be customized to the specific needs and constraints of various applications.
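To see why a 7 billion parameter model is a realistic fit for a single GPU, a back-of-the-envelope estimate helps. The sketch below multiplies the parameter count by the bytes used per parameter. It takes 7 billion as a round figure and ignores activations, the attention cache, and framework overhead, so treat the output as a lower bound rather than a measurement. The function name and the precision table are our own, chosen for illustration.

```python
# Rough weight-memory estimate: parameter count x bytes per parameter.
# Assumption: only the weights are counted; activations, attention cache
# and framework overhead are ignored, so real usage will be higher.

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Return the approximate size of the weights in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for dtype in BYTES_PER_PARAM:
        size = weight_memory_gb(7e9, dtype)
        print(f"7B weights in {dtype}: ~{size:.1f} GB")
```

In half precision the weights alone come to roughly 14 GB, which is why lower-precision formats matter for consumer GPUs and edge devices.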

Mistral AI’s commitment to tracking and showcasing small model performance sends a clear message – smaller models can achieve remarkable results with the right approach and optimization.

Licensing Freedom

One of the standout features of Mistral AI’s release is its commitment to open source and licensing freedom. The models come with an Apache 2.0 license, which permits commercial and research use, modification, and redistribution, provided the license’s attribution and notice requirements are met.

This openness allows developers and researchers to utilize the models for a wide range of applications and research, fostering innovation and collaboration within the AI community.

Mistral AI has also made its code available on GitHub, providing a valuable resource for those interested in delving deeper into their techniques.

Whether it’s exploring sliding window attention mechanisms or understanding their novel approaches to faster inference, the company’s GitHub repository is a treasure trove of AI knowledge.
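For readers new to sliding window attention, the idea is that each token attends only to a fixed number of recent tokens instead of the whole preceding sequence. The sketch below builds such a mask in plain Python. It is an illustration of the general technique, not Mistral AI’s implementation: the function name is ours, the window size is tiny for readability, and we assume the window counts the current token.

```python
# Illustrative sliding-window causal mask (not taken from mistral-src).
# Assumption: the window includes the current position, so window=3 means
# "this token plus the two tokens before it".


def sliding_window_causal_mask(seq_len: int, window: int) -> list[list[bool]]:
    """mask[i][j] is True when query position i may attend to key position j."""
    return [
        [(j <= i) and (i - j < window) for j in range(seq_len)]
        for i in range(seq_len)
    ]


if __name__ == "__main__":
    # Print the mask as a grid: "x" = may attend, "." = masked out.
    for row in sliding_window_causal_mask(seq_len=6, window=3):
        print("".join("x" if allowed else "." for allowed in row))
```

Because each row contains at most `window` allowed positions, the cost per token stays bounded as the sequence grows, which is where the inference savings come from.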

How to Use Mistral AI’s 7 Billion Parameter Models

Now that we’ve explored the significance and potential of Mistral AI’s 7 billion parameter models, let’s dive into a step-by-step guide on how to use them effectively.

Step 1: Access the Models

Mistral AI has made its models accessible and user-friendly. You can find detailed information about the models, their architecture, and usage guidelines on their official website and GitHub repository.

Step 2: Choose the Right Model

Mistral AI offers two versions of its 7 billion parameter model: the Instruct Model (fine-tuned) and the Base Model. Determine which model aligns better with your project requirements and objectives.
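The practical difference between the two shows up in how you write prompts. The base model simply continues text, while the Instruct model expects the instruction to be wrapped in `[INST]` and `[/INST]` markers, as we understand its Hugging Face model card. The sketch below is a hypothetical helper, and the Instruct repository name is an assumption (only the base repository is linked in this article), so check the model card before relying on either.

```python
# Hypothetical helper for picking a model variant and formatting a prompt.
MODEL_IDS = {
    "base": "mistralai/Mistral-7B-v0.1",
    # Assumed repository name for the Instruct variant; verify on Hugging Face.
    "instruct": "mistralai/Mistral-7B-Instruct-v0.1",
}


def build_prompt(user_message: str, variant: str = "instruct") -> str:
    """Wrap the message for the Instruct model; pass it through for the base model."""
    if variant not in MODEL_IDS:
        raise ValueError(f"unknown variant: {variant!r}")
    if variant == "instruct":
        # The tokenizer normally adds the beginning-of-sequence token itself.
        return f"[INST] {user_message.strip()} [/INST]"
    return user_message


if __name__ == "__main__":
    print(build_prompt("Summarize the Apache 2.0 license in one sentence."))
```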

Step 3: Set Up Your Environment

To use Mistral AI’s models, ensure you have a suitable environment in place. Depending on your preferences and familiarity, you can choose between direct usage of the models or use popular libraries like Hugging Face Transformers, which may simplify the integration process.
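If you take the Hugging Face Transformers route, a quick check of your environment can save a failed run later. The sketch below reports which packages are missing. The package list is our assumption of a typical setup (`torch`, `transformers`, and `accelerate` for automatic device placement); your project may need a different set, and Mistral support requires a reasonably recent `transformers` release.

```python
# Minimal environment check. The package list is an assumption about a
# typical Transformers setup, not an official requirements list.
import importlib.util

REQUIRED = ("torch", "transformers", "accelerate")


def missing_packages(names=REQUIRED) -> list[str]:
    """Return the names from `names` that cannot be imported."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All required packages are installed.")
```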

Step 4: Installation and Configuration

If you opt for direct usage, follow Mistral AI’s installation and configuration instructions provided in their GitHub repository. Make sure you have all the required dependencies and libraries installed.

Step 5: Model Loading

Load your chosen model into your Python environment. Mistral AI’s documentation provides detailed instructions on how to load the models efficiently.
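With Hugging Face Transformers, loading typically comes down to two `from_pretrained` calls. The following is a minimal sketch under a few assumptions: the model ID is the base repository linked at the end of this article, half precision is used to reduce memory, and `device_map="auto"` (which needs the `accelerate` package) decides where the weights go. The imports live inside the function so the snippet can be read and imported without the heavy dependencies installed.

```python
# Sketch of loading the model with Hugging Face Transformers.
# Assumptions: fp16 weights and automatic device placement suit your hardware.


def load_model(model_id: str = "mistralai/Mistral-7B-v0.1"):
    """Download (on first use) and return (tokenizer, model)."""
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype=torch.float16,  # halves memory compared with fp32
        device_map="auto",          # requires the accelerate package
    )
    return tokenizer, model


if __name__ == "__main__":
    # The first call downloads several gigabytes of weights.
    tokenizer, model = load_model()
    print(type(model).__name__)
```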

Step 6: Input and Inference

With the model loaded, you can now start using it for your specific tasks. Input your data and make inferences using the model’s capabilities. Be sure to follow Mistral AI’s recommendations for optimal performance.
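As a rough illustration of the inference step, the helper below tokenizes a prompt, generates a continuation, and decodes the result. It assumes `tokenizer` and `model` are Hugging Face Transformers objects you have already loaded; the function name and the default of 64 new tokens are ours.

```python
# Sketch of a single generation call with Hugging Face Transformers objects.
# Assumption: `tokenizer` and `model` were loaded beforehand.


def generate_text(tokenizer, model, prompt: str, max_new_tokens: int = 64) -> str:
    """Generate a continuation of `prompt` and return it as plain text."""
    # Tokenize and move the tensors to the device the model lives on.
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # The output holds the prompt tokens followed by the newly generated ones.
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling `generate_text(tokenizer, model, "The capital of France is")` would return the prompt followed by the model’s continuation. Without sampling options, generation is greedy by default, so repeated calls should give the same text.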

Step 7: Experiment and Iterate

As with any AI model, it’s essential to experiment and iterate to fine-tune its performance for your specific use case. Try different input data, adjust parameters, and monitor results to achieve the desired outcomes.
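One simple way to structure this experimentation is to sweep a small grid of sampling settings and compare the outputs side by side. The sketch below only builds the grid. The specific values are arbitrary starting points rather than recommendations from Mistral AI, and the keys match generation arguments accepted by Hugging Face Transformers.

```python
# Build a small grid of sampling settings to try during experimentation.
# The default values are arbitrary starting points, not recommendations.
from itertools import product


def sampling_grid(temperatures=(0.2, 0.7, 1.0), top_ps=(0.9, 0.95)) -> list[dict]:
    """Return one settings dict per (temperature, top_p) combination."""
    return [
        {"do_sample": True, "temperature": t, "top_p": p}
        for t, p in product(temperatures, top_ps)
    ]


if __name__ == "__main__":
    for config in sampling_grid():
        print(config)
```

Each dictionary can be unpacked into a generation call, for example `model.generate(**inputs, max_new_tokens=64, **config)`, so you can log the settings next to the output they produced.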

Official Links:

| Resource | Link |
| --- | --- |
| Mistral AI Hugging Face | huggingface.co/mistralai/Mistral-7B-v0.1 |
| Mistral AI GitHub | github.com/mistralai/mistral-src |

Conclusion

Mistral AI’s release of its 7 billion parameter language models represents a significant milestone in the world of AI. Their commitment to open source, licensing freedom, and a focus on smaller models sets them apart in an industry often dominated by proprietary technology and massive models.

While their claims of outperforming existing models are impressive, independent assessments and real-world applications will provide a more comprehensive view of their capabilities. Regardless, Mistral AI’s dedication to pushing the boundaries of open AI models is commendable and aligns with the evolving needs of the AI community.