MemGPT has progressed quickly since the earlier research paper review and introductory tutorial. The team behind MemGPT has already released support for local models and integration with AutoGen.

In this article, we walk through using an open-source local model with MemGPT, so you can run MemGPT without depending on a hosted model.

We’ll also provide a brief guide on running MemGPT with RunPod.

Setting Up MemGPT with Open-Source Models on RunPod

While we’ll focus on RunPod for this guide, you can follow a similar process on your local machine.

Here’s a step-by-step walkthrough:

1. Deploying on RunPod

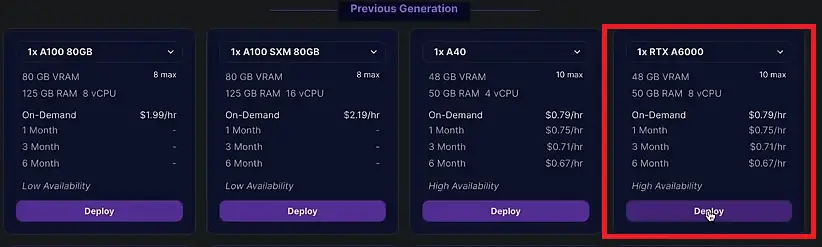

Access RunPod and click on “Secure Cloud.”

Scroll down and select a GPU that suits your needs.

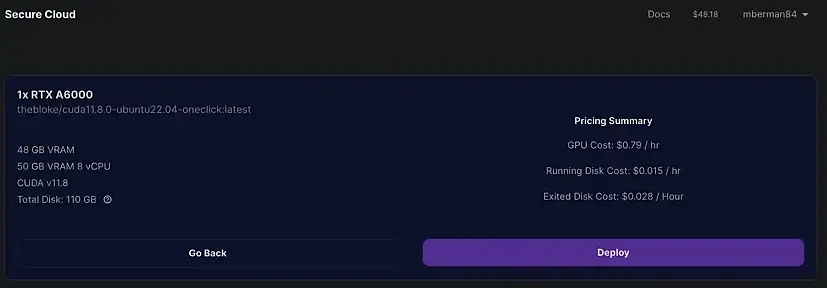

Click “Deploy” to initiate the deployment process. There’s no need to change any other settings. The default configuration works fine.

After deployment, wait for the instance to load fully.

2. Connect to HTTP Service

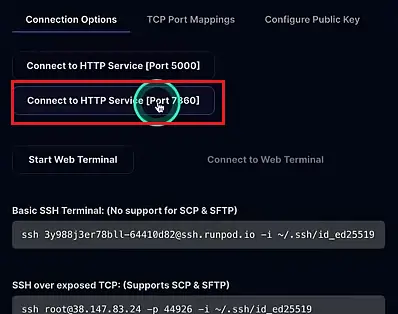

- Click “Connect” to establish a connection.

- Select “Connect to HTTP Service.”

- Use Port 7860 to open the Text Generation Web UI. The API that MemGPT will call is served separately on port 5000.

3. Download the Model

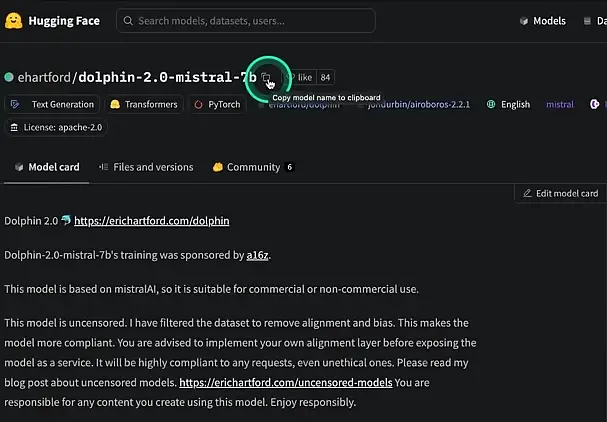

The model we will use in this guide is “Dolphin 2.0 Mistral 7B.” While the larger “Airoboros” model and its prompt template are recommended for the best results, we’ll use this smaller model to demonstrate the process.

Copy the model card info.

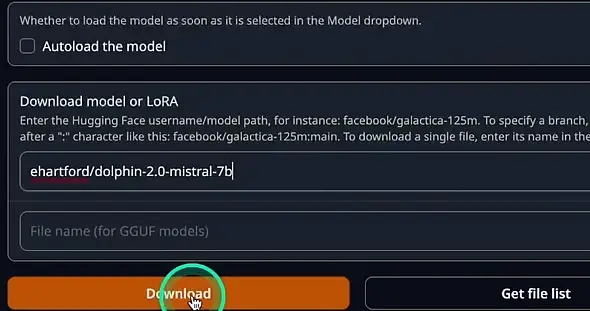

Navigate to the “Model” tab in the Text Generation Web UI.

Paste the model card info.

Click “Download” to initiate the model download. This process is similar whether you’re using RunPod or your local machine.

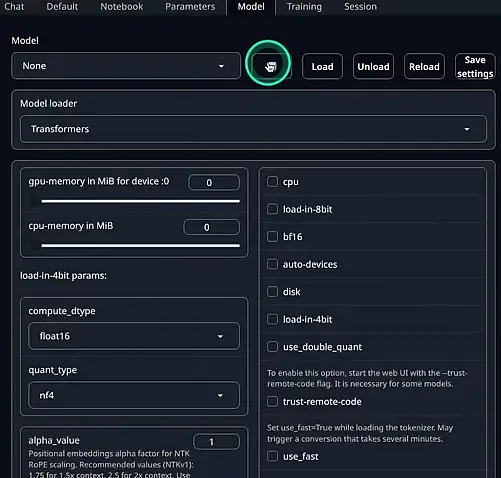

4. Load the Model

To load the model into memory, use the “Transformers” model loader.

- Click the refresh button to load the model you just downloaded.

- Open the model dropdown, which initially shows “None,” and select the new model.

- Click “Load” and wait for the message confirming that the model loaded successfully.

5. Set Backend Type

Navigate to the “Session” tab and set the backend type to “web UI.” There’s no need to enable any flags or apply extensions.

6. Copy the API Endpoint URL

Copy the URL of your RunPod instance, which will serve as the API endpoint for MemGPT.
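As a rough sketch of this step, the snippet below derives the API address from the web UI address by swapping port 7860 for port 5000. It assumes your instance is reached through a RunPod proxy URL of the form `https://<pod-id>-<port>.proxy.runpod.net`; the pod ID `abc123xyz` and the variable names `WEBUI_URL` and `API_URL` are hypothetical. If your instance exposes a plain host and port instead, use that host with port 5000.

```shell
# Hypothetical web UI address copied from the browser; replace with your own.
WEBUI_URL="https://abc123xyz-7860.proxy.runpod.net"

# Swap the web UI port (7860) for the API port (5000).
# Assumption: the port is embedded in the hostname as "-7860.".
API_URL=$(printf '%s\n' "$WEBUI_URL" | sed 's/-7860\./-5000./')

echo "Web UI: $WEBUI_URL"
echo "API:    $API_URL"
```

Compare the printed API address with what RunPod shows for port 5000 before relying on it.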

Installing MemGPT

Now that you have set up your environment on RunPod, follow these steps to install MemGPT and connect it to the open-source model:

Clone the MemGPT repository from GitHub:

git clone <GitHub URL>

Alternatively, the authors provide a MemGPT module for your convenience, which removes the need to clone the repository.

Change your current directory to the MemGPT directory:

cd MemGPT

Export the API endpoint as the base URL for MemGPT. Be sure to use the correct port (5000) for the API:

export OPENAI_API_BASE=<RunPod URL>:5000

Set the backend type to “webui” (one word, no space):

export BACKEND_TYPE=webui

Create a Conda environment for MemGPT. If you already have a Conda environment, you can skip this step:

conda create -n auto-mem-gpt python=3.10

Activate the Conda environment:

conda activate auto-mem-gpt

Install the necessary requirements:

pip install -r requirements.txt

Start MemGPT:

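python3 main.py --no_verify

The --no_verify flag bypasses MemGPT’s message verification, which smaller local models may otherwise fail. If it’s your first time running MemGPT, you may need to set it up from scratch.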
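If MemGPT cannot reach the model, a quick check of the two environment variables can rule out the most common mistakes, such as a missing URL scheme or a space in the backend type. The sketch below assumes the variable names `OPENAI_API_BASE` and `BACKEND_TYPE`; the endpoint value is a placeholder, and `check_env` is a hypothetical helper, not part of MemGPT.

```shell
# Placeholder values for illustration; replace the URL with your own endpoint.
export OPENAI_API_BASE="https://abc123xyz-5000.proxy.runpod.net"
export BACKEND_TYPE="webui"

# check_env is a hypothetical helper, not part of MemGPT.
# It returns 0 when both variables look plausible and 1 otherwise.
check_env() {
    if [ -z "$OPENAI_API_BASE" ]; then
        echo "OPENAI_API_BASE is not set"
        return 1
    fi
    case "$OPENAI_API_BASE" in
        http://*|https://*) ;;
        *)
            echo "OPENAI_API_BASE should start with http:// or https://"
            return 1
            ;;
    esac
    if [ "$BACKEND_TYPE" != "webui" ]; then
        echo "BACKEND_TYPE should be webui, got: '$BACKEND_TYPE'"
        return 1
    fi
    echo "environment looks OK"
}

check_env
```

The check only inspects the variables in the current shell. It does not contact the endpoint, so a passing result does not guarantee that the model server is reachable.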

Using MemGPT with Open-Source Models

Now that MemGPT is set up with the open-source model, you can start using it. Here’s a simple example:

Initiate MemGPT by running the following command:

python3 main.py

Select the appropriate options, such as the model (GPT-4), Persona (e.g., Sam), and user type.

Once MemGPT is running, type your prompts at the command line and it will respond.

Conclusion

MemGPT offers a versatile platform for working with open-source models. By following the steps in this guide, you can get started with MemGPT and explore its potential for text generation and more.

Stay updated with MemGPT’s progress as it continues to evolve and offer more advanced features.

FAQs

1. What is MemGPT?

MemGPT is an open-source system that manages memory for large language models, letting them keep track of information beyond their limited context window. It is not a language model itself: it runs on top of one, and it supports local models, including open-source ones.

2. Can I run MemGPT on my local machine?

Yes, you can use MemGPT on your local machine by following a setup process similar to the one described in this guide. You’ll need to download the required model and set up the environment accordingly.

3. What is the advantage of using open-source models with MemGPT?

Using open-source models with MemGPT provides you with more flexibility and control over the models you use. It allows you to customize your AI interactions to suit your specific needs.

4. How do I choose the right model for my task?

The choice of the model depends on your task and requirements. It’s recommended to experiment with different models to find the one that works best for your specific use case.

5. Are there plans for more advanced features in MemGPT?

The development of MemGPT is ongoing, and the team is constantly working to improve and expand its capabilities. Stay tuned for updates and new features in future releases.